In March 2020, a historic moment occurred in the sky above a battlefield in the Libyan desert. The incident unfolded as soldiers from the Government of National Accord battled troops known as the Haftar Affiliated Forces (HAF). According to a United Nations Security Council report, as HAF forces retreated, they were hunted down and engaged by an AI-powered autonomous weapons system. This is believed to be the first documented instance of AI in warfare taking a human life.

What are Autonomous Weapons Systems?

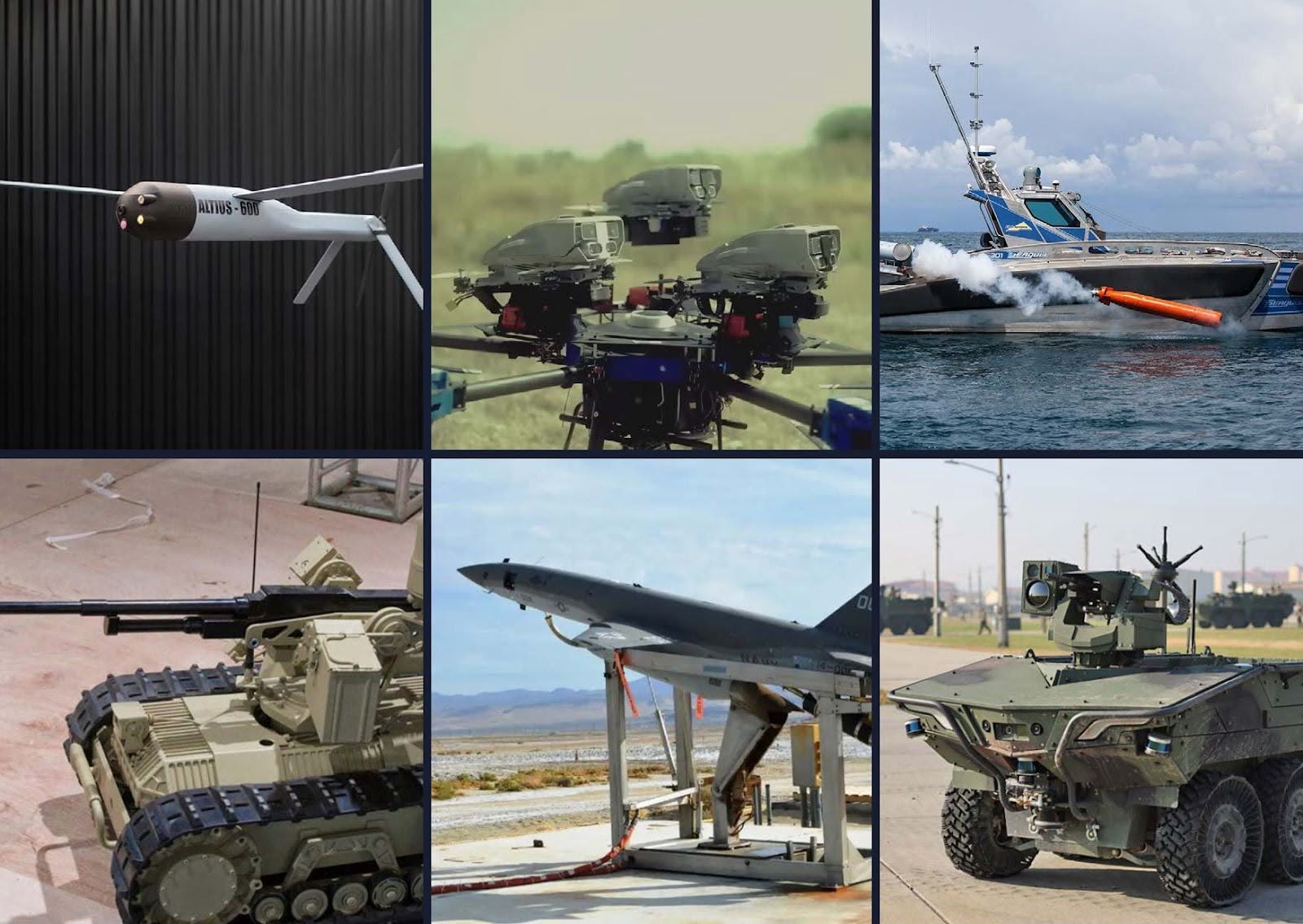

Autonomous weapons systems are capable of selecting and applying force to targets independent of human control or oversight. With conventional unmanned military drones, a human operator remotely decides whether to take a life; with autonomous weapons, algorithms make this decision independently. As the UN Security Council report noted, these systems were “programmed to attack targets without requiring data connectivity between the operator and the munition—in effect, achieving a true ‘fire, forget, and find’ capability.”

Whether the incident described above is truly the first time an autonomous weapon killed an enemy combatant remains uncertain. What is clear, however, is that it was not the last use of AI in war. In February 2024, Ukrainian President Volodymyr Zelenskyy announced the establishment of a new branch of the military: the Unmanned Systems Forces, dedicated entirely to deploying aerial, ground, and sea drones. Days later, his government revealed that Ukraine was on track to manufacture more than one million drones by the end of 2024. According to a July 2024 article from The New York Times, both sides in the conflict are actively developing and deploying autonomous weapons, with numerous startups rapidly advancing drones capable of autonomously tracking and attacking targets.

How AI in the Military is Changing Warfare

Autonomous weapons present a multitude of risks that demand urgent attention. These systems can carry out actions leading to widespread destruction and the loss of innocent lives without human intervention, making it difficult to assign responsibility for war crimes. When an algorithm makes a life-or-death decision, who is accountable—the programmer, the operator, the military commander, or the state? This uncertainty undermines legal frameworks, complicates accountability, and erodes international justice.

Autonomous weapons also pose a serious threat to global stability. They are often trained on classified data and lack transparency in their decision-making processes. When deployed, they may interact with enemy systems in unexpected ways, leading to sudden and unintended escalations. We have already witnessed how quickly an error in an automated system can escalate in financial markets. Most notably, in the 2010 Flash Crash, a feedback loop between automated trading algorithms amplified ordinary market fluctuations into a financial catastrophe in which roughly a trillion dollars of stock value vanished in minutes. By automating our militaries, we risk “flash wars.” The market quickly recovered from the 2010 Flash Crash, but the harm caused by a flash war could be catastrophic and irreversible.

The relatively low production costs of autonomous weapons make them appealing to non-state armed groups, enabling their use in genocides or the targeted assassinations of political and military leaders. This growing accessibility of advanced weaponry could destabilize regions and worsen global conflicts. Autonomous weapons are also uniquely vulnerable to cyberattacks. Hackers could infiltrate these systems, manipulating their behavior or redirecting their targets, with devastating consequences. Such vulnerabilities open up new fronts in warfare, where cyberattacks could be just as deadly as physical ones, further complicating military operations.

The Ethical Concerns of AI-Powered Weapons in Combat

From an ethical perspective, the use of autonomous weapons raises profound concerns. Delegating life-and-death decisions to machines dehumanizes warfare and violates deeply held moral principles. Many religious leaders have condemned their use. While addressing G7 leaders, Pope Francis called for an “effective and concrete commitment to introduce ever greater and proper human control,” stating that “No machine should ever choose to take the life of a human being.” Public opinion largely aligns with this stance, as polls consistently show that a majority of people view autonomous weapons as unethical.

Finally, the unpredictability of autonomous weapons adds another layer of risk. These systems rely on algorithms that respond to environmental stimuli, making their behavior difficult to anticipate or control. This unpredictability not only heightens the potential for unintended casualties but also undermines the strategic reliability of military operations.

The convergence of these issues—accountability gaps, global instability, ethical dilemmas, cyber vulnerabilities, and operational unpredictability—makes the proliferation of autonomous weapons deeply problematic. These systems challenge the boundaries of technology, ethics, and international security in ways the world is only beginning to understand. Consequently, there is an urgent global push to regulate and control them.

For further information on the ongoing efforts to establish international regulations on autonomous weapons, see our post Milestones in the Global Legal Framework for Autonomous Weapons.